In this blog post, I present a modular multi-agent system in which agents with different task-specific capabilities cooperate on a research challenge. Each agent is assigned a specific role, and the agents produce the final result through inter-agent communication. Code snippets illustrating the framework are included so that it can be explored and applied in other domains.

Introduction

Multi-Agent Systems (MAS) are a paradigm in which multiple independent agents work together to solve complex problems more efficiently than a single monolithic system. Inspired by human team dynamics, a MAS splits tasks among specialized agents, which enables parallelism, adaptability, and modularity.

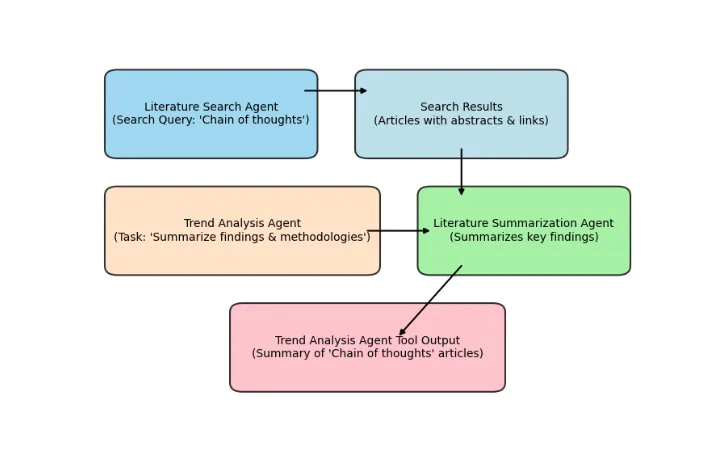

In this work, I explore how MAS can streamline research tasks. The system utilizes four agents:

- SearchAgent: Gathers relevant publications on a research topic.

- SummarizationAgent: Extracts concise insights from the retrieved publications.

- TrendAgent: Identifies trends and patterns in the summarized findings.

- ManagerAgent: Oversees and coordinates the workflow.
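Before bringing in a framework, the hand-off between these roles can be sketched in plain Python. This is only an illustration: `StubAgent` and `run_pipeline` are hypothetical names, and the lambda functions stand in for LLM-backed agents that would do the real work.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class StubAgent:
    """Hypothetical stand-in for an LLM-backed agent: a name plus one step function."""
    name: str
    step: Callable[[str], str]


def run_pipeline(agents: List[StubAgent], topic: str) -> List[str]:
    """Manager-style loop: feed each agent the previous agent's output."""
    payload = topic
    trace = []
    for agent in agents:
        payload = agent.step(payload)
        trace.append(f"{agent.name}: {payload}")
    return trace


# Search -> summarize -> analyze trends, mirroring the roles listed above.
pipeline = [
    StubAgent("SearchAgent", lambda topic: f"papers about {topic}"),
    StubAgent("SummarizationAgent", lambda papers: f"summaries of {papers}"),
    StubAgent("TrendAgent", lambda summaries: f"trends in {summaries}"),
]

for line in run_pipeline(pipeline, "chain-of-thought prompting"):
    print(line)
```

Each agent only sees the output of the previous one, which is the property that lets a single role be swapped out without touching the others.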

Framework Overview

To implement this multi-agent system, we use the CrewAI framework. It follows a modular architecture in which tasks are independent, standalone units, and each task is assigned to an agent with the specific capabilities needed to carry it out in a focused, efficient way. This modularity makes the system flexible, scalable, and adaptable to different scientific fields.

The following notebook command installs CrewAI and its companion tools package:

!pip install -q crewai crewai_tools

Next, we import the necessary libraries:

from crewai import Agent, Task, Crew, LLM

Large Language Model Configuration

The system uses the NVIDIA NeMo framework as the foundation for LLM deployment and customization, aiming for high performance and adaptability. Specifically, it uses LLaMA 3.3, an openly available transformer model with 70 billion parameters that can be adapted to domain-specific language tasks. The code in this post accesses the instruction-tuned variant through the nvidia_nim provider prefix.

- Model Provider: NVIDIA NeMo

- Model Version: LLaMA 3.3

- Parameter Scale: 70 billion parameters, enabling fine-grained contextual understanding and sophisticated semantic inference.

- Temperature Settings: Adjusted per task to balance exploration and determinism (both LLM instances in this post use 0.7).
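The idea of adjusting temperature per task can be sketched as a small lookup table. The role names, the helper `llm_settings`, and the temperature values below are assumptions chosen for illustration; they are not tuned settings from this project.

```python
# Hypothetical temperatures per agent role; the numbers are illustrative, not tuned.
TEMPERATURE_BY_ROLE = {
    "search": 0.2,         # retrieval-style work: favor determinism
    "summarization": 0.3,  # stay close to the source text
    "trend": 0.7,          # allow more exploratory synthesis
}


def llm_settings(role: str,
                 model: str = "nvidia_nim/meta/llama-3.3-70b-instruct",
                 default_temperature: float = 0.7) -> dict:
    """Return keyword arguments describing an LLM configured for the given role."""
    temperature = TEMPERATURE_BY_ROLE.get(role, default_temperature)
    return {"model": model, "temperature": temperature}


for role in ("search", "summarization", "trend", "manager"):
    print(role, llm_settings(role))
```

A dictionary like this could then be unpacked into the LLM constructor together with the API key, so that each agent gets its own sampling behavior while sharing one model.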

The system is designed with a dual-LLM architecture: each agent is bound to one of two LLM instances, which may come from different providers. This makes it possible to pick the most suitable model for each task, trading off computational cost against output quality. In the configuration below, both instances use the same model and temperature; keeping them separate means either one can be swapped independently.

# secret_key is assumed to hold your NVIDIA API key, defined before this point.
llm1 = LLM(
    model="nvidia_nim/meta/llama-3.3-70b-instruct",
    api_key=secret_key,
    temperature=0.7,
)

llm2 = LLM(
    model="nvidia_nim/meta/llama-3.3-70b-instruct",
    api_key=secret_key,
    temperature=0.7,
)
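The configuration above relies on a `secret_key` variable that is not defined in this post. One common way to supply it without hard-coding the key is to read it from an environment variable. The helper below is a minimal sketch; the function name `load_api_key` and the variable name `NVIDIA_NIM_API_KEY` are assumptions, so use whatever name your environment actually sets.

```python
import os


def load_api_key(var_name: str = "NVIDIA_NIM_API_KEY") -> str:
    """Return the API key stored in the given environment variable.

    The default variable name is an assumption for illustration.
    """
    key = os.environ.get(var_name, "").strip()
    if not key:
        raise RuntimeError(
            f"Set the {var_name} environment variable before creating the LLMs."
        )
    return key
```

With this in place, `secret_key = load_api_key()` would run before the two `LLM(...)` calls, and a missing key fails early with a clear message instead of at the first model request.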

Setting up the agents

Literature Search Agent

- Queries databases such as PubMed and Google Scholar.

- Retrieves relevant papers on a given topic.

SearchAgent = Agent(
    role="Literature Search Agent",
    goal="Query scientific databases to retrieve relevant research on {topic}.",
    backstory="""You are a specialized agent adept at navigating databases
    like PubMed, arXiv, and Google Scholar to find the latest and
    most relevant studies on {topic}.""",
    allow_delegation=True,
    memory=True,
    cache=True,
    # SearchTool is not defined in this post; substitute the search tool
    # you have imported or implemented.
    tools=[SearchTool()],
    llm=llm1,
)

Summarization Agent

- Extracts methodologies, findings, and gaps from the retrieved literature.

SummarizationAgent = Agent(
    role="Literature Summarization Agent",
    goal="""Analyze retrieved research papers and extract detailed
    summaries on {topic}, highlighting methodologies, findings, and gaps.""",
    backstory="""You are an expert in natural language processing
    and scientific comprehension, capable of summarizing complex
    research into actionable insights.""",
    allow_delegation=True,
    memory=True,
    cache=True,
    llm=llm2,
)

Trend Analysis Agent

- Identifies trends and knowledge gaps from summarized data.

TrendAgent = Agent(
    role="Trend Analysis Agent",
    goal="""Analyze summarized literature to detect emerging trends,
    cross-disciplinary insights, and knowledge gaps in {topic} research.""",
    backstory="""You specialize in synthesizing large volumes of information to identify
    patterns, trends, and opportunities for further exploration.""",
    allow_delegation=True,
    memory=True,
    cache=True,
    llm=llm1,
)

Project Manager Agent

- Orchestrates the workflow, ensuring quality and timely completion.

manager = Agent(
    role="Project Manager",
    goal="Efficiently manage the crew and ensure high-quality task completion",
    backstory="""You're an experienced project manager, skilled in overseeing
    complex projects and guiding teams to success. Your role is to coordinate
    the efforts of the crew members, ensuring that each task is completed
    on time and to the highest standard.""",
    # No llm is passed here, so CrewAI falls back to its default model
    # configuration; add llm=llm1 to reuse the model configured above.
    allow_delegation=True,
)

Defining Tasks

Tasks are defined as modular components, each assigned to a specific agent. The following tasks form the workflow:

Search Task

Searches for literature on a given topic.

SearchTask = Task(
    description="""
    Perform an automated search for scientific literature on {topic}.
    Share your findings with the other agents.

    INPUT: {topic} - A specific topic or keyword.

    COMMUNICATION PROCESS:
    1. The SearchAgent queries scientific databases to retrieve relevant articles.
    2. The retrieved articles are organized into categories based on relevance and topic.
    3. The results are passed to the SummarizationAgent for further processing.

    OUTPUT FORMAT:
    A structured list of retrieved articles with titles, abstracts, and links to full text.
    """,
    expected_output="A curated list of relevant literature with links and abstracts, ready for summarization.",
    agent=SearchAgent,
)
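The output format requested from the search step (titles, abstracts, links, grouped by category) can be made concrete with a small data structure. This is a sketch only: the `Article` class, the `group_by_category` helper, and the sample entries are hypothetical placeholders, not results produced by the system.

```python
import json
from dataclasses import asdict, dataclass
from typing import Dict, List


@dataclass
class Article:
    """One retrieved article, with the fields named in the task's output format."""
    title: str
    abstract: str
    url: str
    category: str


def group_by_category(articles: List[Article]) -> Dict[str, List[dict]]:
    """Organize articles into categories, as step 2 of the task describes."""
    grouped: Dict[str, List[dict]] = {}
    for article in articles:
        grouped.setdefault(article.category, []).append(asdict(article))
    return grouped


# Placeholder entries for illustration only; these are not real papers.
articles = [
    Article("Placeholder title A", "Placeholder abstract.", "https://example.org/a", "methods"),
    Article("Placeholder title B", "Placeholder abstract.", "https://example.org/b", "methods"),
    Article("Placeholder title C", "Placeholder abstract.", "https://example.org/c", "applications"),
]

print(json.dumps(group_by_category(articles), indent=2))
```

Serializing the grouped result as JSON gives the downstream summarization step a predictable shape to work from.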

Summarization Task

Summarizes findings from the retrieved literature.

SummarizationTask = Task(
    description="""
    Summarize the findings, methodologies, and implications of the retrieved articles
    on {topic} accumulated by the Literature Search Agent.

    INPUT: articles - A list of articles with titles, abstracts, and links.

    COMMUNICATION PROCESS:
    1. The SummarizationAgent analyzes each article, extracting key findings, methodologies, and conclusions.
    2. The summaries are organized into thematic categories.
    3. The results are passed to the TrendAgent for trend analysis.

    OUTPUT FORMAT:
    A collection of detailed summaries organized by theme, highlighting key findings and gaps.
    """,
    expected_output="A set of detailed summaries of the retrieved literature, categorized by thematic relevance.",
    agent=SummarizationAgent,
)

Trend Analysis Task

Detects patterns and research gaps from the summarized data.

TrendAnalysisTask = Task(
    description="""
    Analyze summarized literature to identify trends, emerging areas of research, and interdisciplinary connections in {topic}.

    INPUT: summaries - A collection of summaries categorized by theme, provided by the other agents.

    COMMUNICATION PROCESS:
    1. The TrendAgent analyzes the summaries to detect patterns, recurring themes, and emerging trends.
    2. Gaps in existing research are identified, and potential areas for further exploration are suggested.
    3. Key insights and recommendations are compiled into a cohesive report.

    OUTPUT FORMAT:
    A structured report detailing:
    - Identified trends and emerging areas of research
    - Cross-disciplinary connections
    - Research gaps and recommended areas for future exploration
    """,
    expected_output="A comprehensive report highlighting trends, gaps, and recommendations for future research on {topic}.",
    agent=TrendAgent,
)
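Each task description contains a `{topic}` placeholder that is filled in from the inputs supplied when the crew is started. The substitution can be illustrated in plain Python. This is a sketch of the idea under that assumption, not CrewAI's internal implementation, and `fill_placeholders` is a hypothetical helper.

```python
def fill_placeholders(template: str, inputs: dict) -> str:
    """Replace each {key} in the template with the matching value from inputs."""
    text = template
    for key, value in inputs.items():
        text = text.replace("{" + key + "}", str(value))
    return text


description = "Perform an automated search for scientific literature on {topic}."
print(fill_placeholders(description, {"topic": "Chain of thoughts"}))
# prints: Perform an automated search for scientific literature on Chain of thoughts.
```

Because the same placeholder appears in agent goals, backstories, and task descriptions, a single input value keeps all three agents focused on the same topic.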

Running the Crew

The agents are grouped into a Crew, orchestrated by the manager. The workflow kicks off with the following command:

# research_crew is the Crew object that groups the agents and tasks defined above.
text = "Chain of thoughts"
result = research_crew.kickoff(inputs={"topic": text})
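The kickoff call assumes that a `research_crew` object already exists. One way it might be assembled, assuming the manager-led setup described above maps onto CrewAI's hierarchical process, is sketched below. The helper name `build_research_crew` is hypothetical, and the author's actual crew definition may differ.

```python
def build_research_crew(agents, tasks, manager_agent):
    """Assemble a hierarchical crew in which manager_agent coordinates the others."""
    # Imported lazily so this helper can be defined before crewai is installed.
    from crewai import Crew, Process

    return Crew(
        agents=agents,                # worker agents only; the manager is passed separately
        tasks=tasks,                  # tasks the manager coordinates
        manager_agent=manager_agent,  # the Project Manager agent defined earlier
        process=Process.hierarchical,
        verbose=True,
    )
```

With the objects defined earlier, `research_crew = build_research_crew([SearchAgent, SummarizationAgent, TrendAgent], [SearchTask, SummarizationTask, TrendAnalysisTask], manager)` would need to run before the kickoff call.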

Conclusion

This multi-agent framework illustrates how collaborative AI agents can speed up parts of the scientific discovery process. By automating labor-intensive, time-consuming tasks such as literature search, summarization, and trend analysis, it frees researchers to focus on high-level analysis, strategic decisions, and creative problem-solving. Because each agent can be bound to a different LLM, the framework stays adaptable and can scale across diverse research workflows.

Future iterations of this framework may incorporate automated code interpretation, retrieval-augmented generation (RAG), reinforcement learning from human feedback (RLHF) for multi-agent optimization, interactive visualization dashboards, and real-time collaboration features. These enhancements would further streamline research processes and support collaboration across disciplines. The framework is a step toward AI-driven research ecosystems that make scientific work more efficient.

About the author

Saurab Mishra

BSMS student at the Indian Institute of Science Education and Research, Thiruvananthapuram.

Expected to graduate by 2028

Posts: 2

Latest post: December 10th

Data Science and AI enthusiast with expertise in LLMs, AI, and MAS.